Downloads: Windows: x64, Arm64 | Mac: Universal, Intel, silicon | Linux: deb, rpm, tarball, Arm, snap

Welcome to the April 2024 release of Visual Studio Code. There are many updates in this version that we hope you'll like. Some of the key highlights include:

If you'd like to read these release notes online, go to Updates on code.visualstudio.com.

Insiders: Want to try new features as soon as possible? You can download the nightly Insiders build and try the latest updates as soon as they are available.

Accessibility

Progress accessibility signal

The `accessibility.signals.progress` setting enables screen reader users to hear progress anywhere a progress bar is presented in the user interface. The signal plays after three seconds have elapsed, and then loops every five seconds until the progress bar completes. Examples of when a signal might play include searching a workspace, waiting for a pending chat response, running a notebook cell, and more.
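To make the timing rule concrete, here is a small sketch that lists when the signal would sound for a progress bar of a given duration. It is a hypothetical model of the documented behavior (first signal after 3 s, then every 5 s), not VS Code's implementation, and the function name is illustrative. It assumes no signal plays once the progress bar has completed.

```typescript
// Hypothetical model of the documented schedule: the first progress signal
// plays after 3 s, then repeats every 5 s while the progress bar is running.
const FIRST_DELAY_MS = 3_000;
const LOOP_INTERVAL_MS = 5_000;

// Returns the elapsed times (in ms) at which a signal would play for a
// progress bar that completes after `durationMs`.
function progressSignalTimes(durationMs: number): number[] {
  const times: number[] = [];
  for (let t = FIRST_DELAY_MS; t < durationMs; t += LOOP_INTERVAL_MS) {
    times.push(t);
  }
  return times;
}

progressSignalTimes(2_000); // returns [] (finished before the first signal)
progressSignalTimes(14_000); // returns [3000, 8000, 13000]
```

Short operations therefore stay silent, which keeps quick searches from producing noise.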

Improved editor accessibility signals

There are now separate accessibility signals for when a line has an error or warning, or when the cursor is on an error or warning.

You can now customize the delay of accessibility signals separately for navigating between lines and between columns in the editor. Also, ARIA alert signals now wait longer before playing than audio cue signals.

Inline suggestions no longer trigger an accessibility signal while the suggest control is shown.

Accessible View

The Accessible View, opened with ⌥F2 (Windows Alt+F2, Linux Shift+Alt+F2), enables screen reader users to inspect workbench features.

Terminal improvements

When you navigate to the next command, ⌥↓ (Windows, Linux Alt+Down), or the previous command, ⌥↑ (Windows, Linux Alt+Up), in the terminal Accessible View, you now hear whether the current command failed. You can toggle this functionality with the `accessibility.signals.terminalCommandFailed` setting.

When this view is opened from a terminal with shell integration enabled, VS Code announces the terminal command line as an alert, for an improved experience.

Chat code block navigation

When you're in the Accessible View for a chat response, you can now navigate to the next code block with ⌥⌘PageDown (Windows, Linux Ctrl+Alt+PageDown) and to the previous code block with ⌥⌘PageUp (Windows, Linux Ctrl+Alt+PageUp).

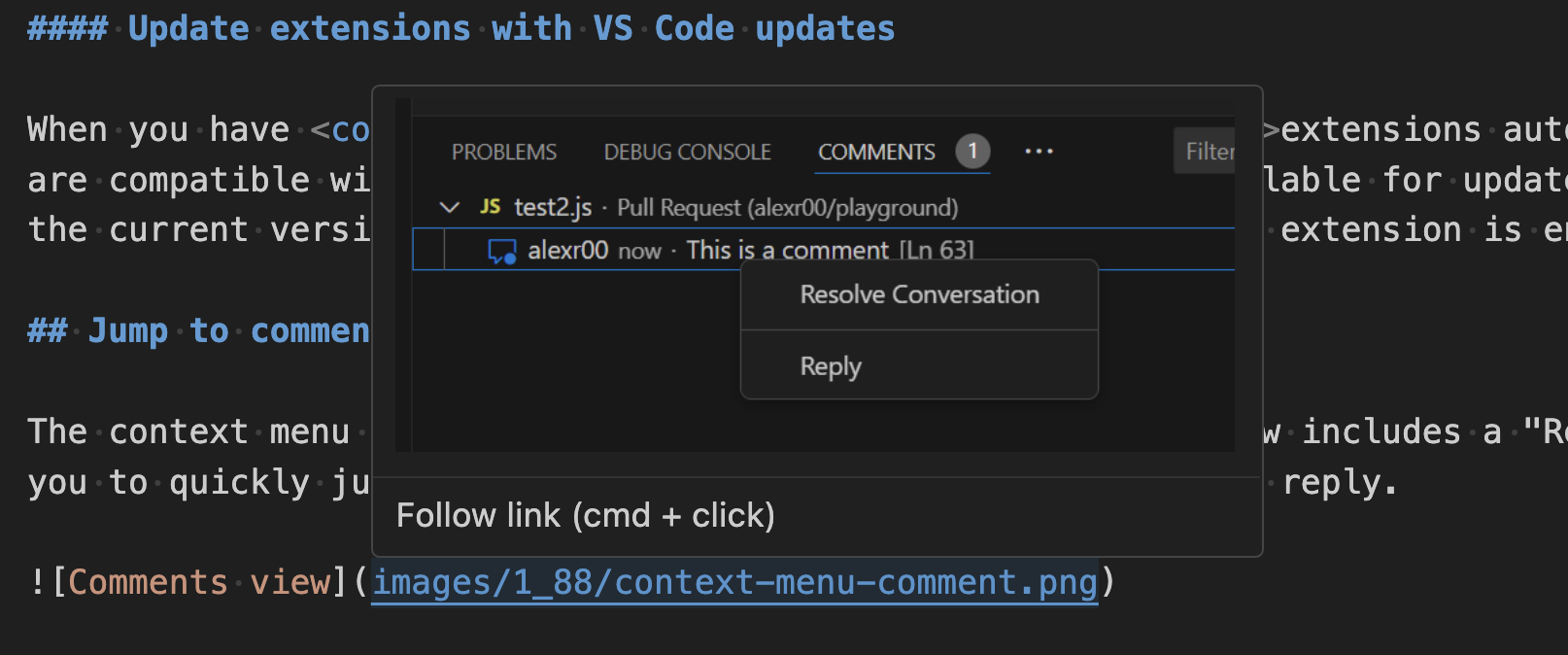

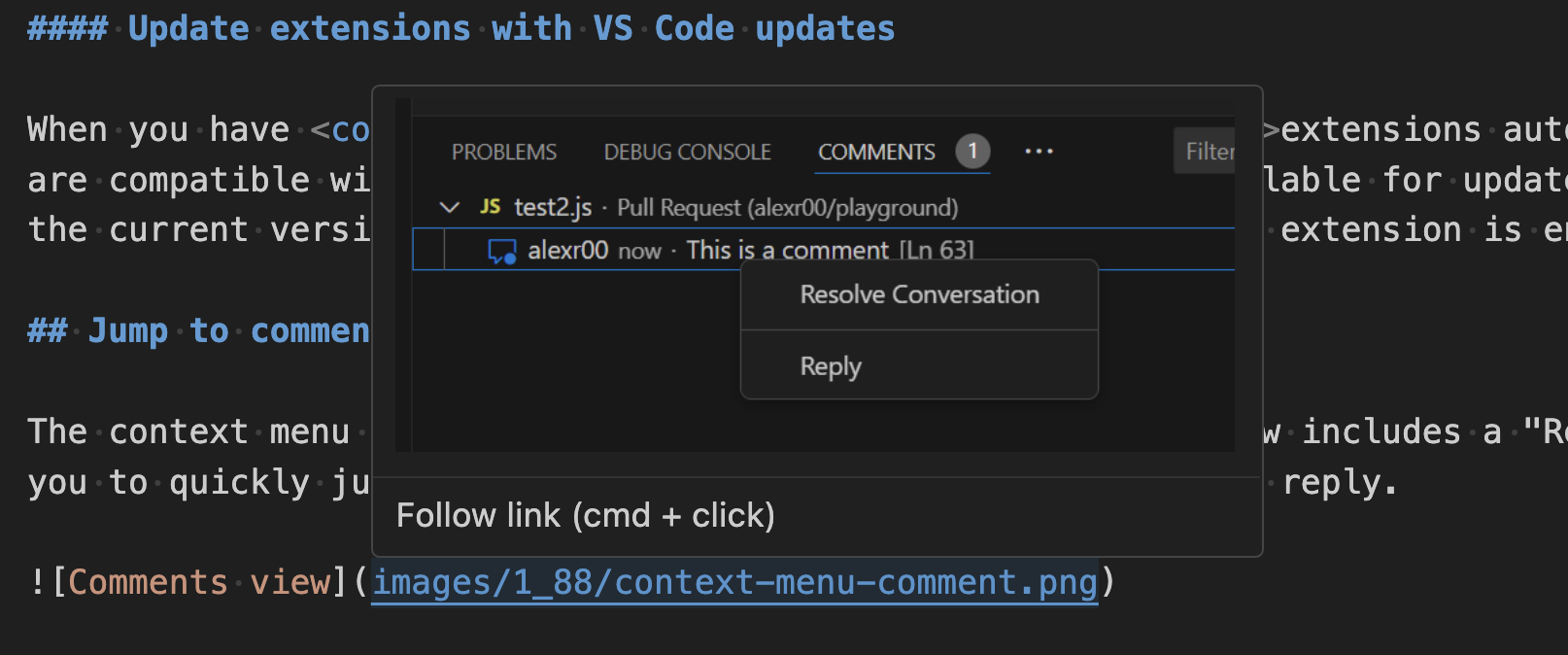

When there is an extension installed that is providing comments and the Comments view is focused, you can inspect and navigate between the comments in the view from within the Accessible View. Extension-provided actions that are available on the comments can also be executed from the Accessible View.

Workbench

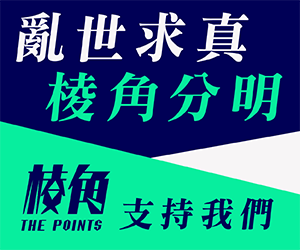

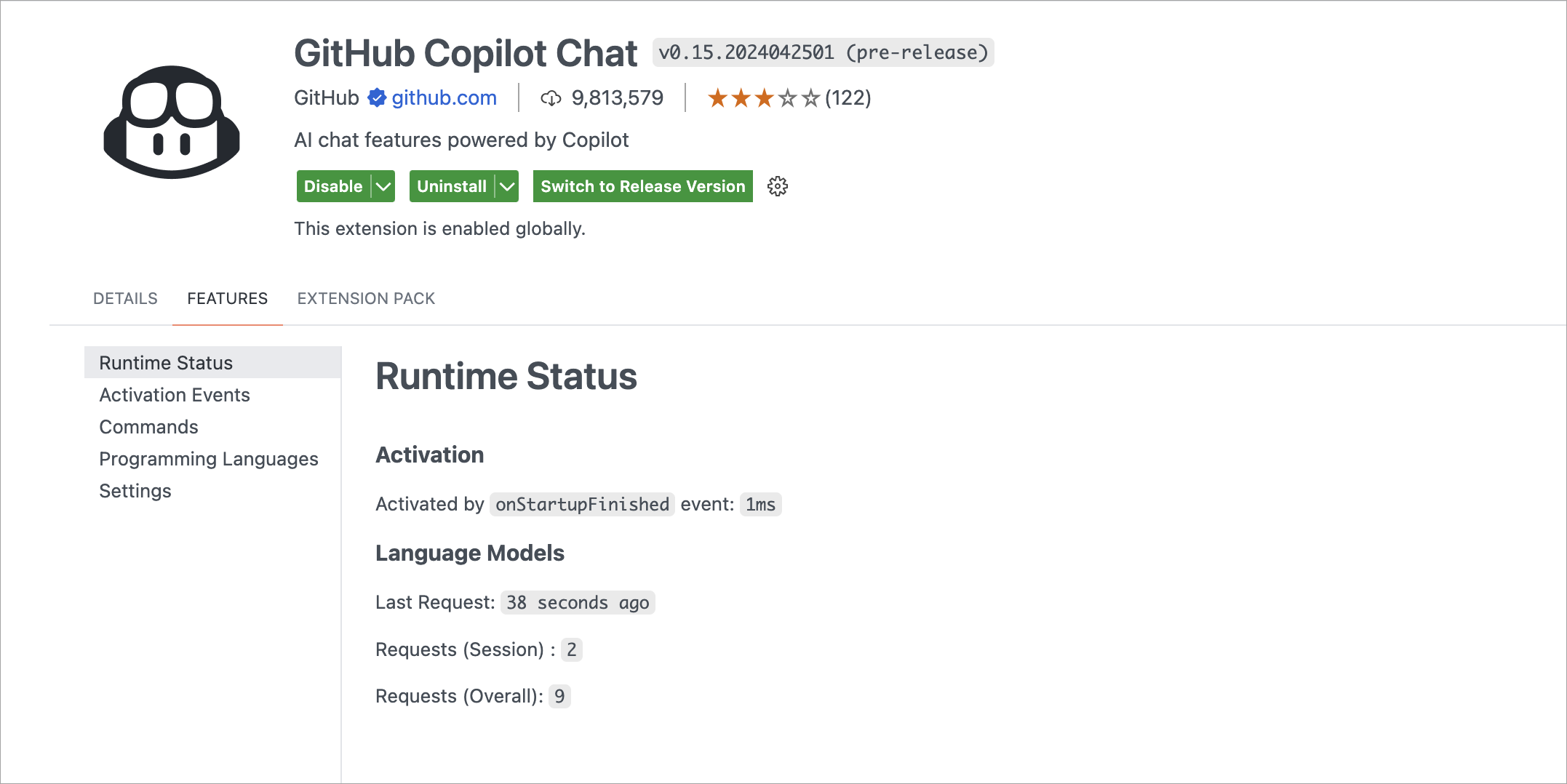

Language model usage reporting

For extensions that use the language model, you can now track their language model usage in the Extension Editor and Runtime Extensions Editor. For example, you can view the number of language model requests, as demonstrated for the Copilot Chat extension in the following screenshot:

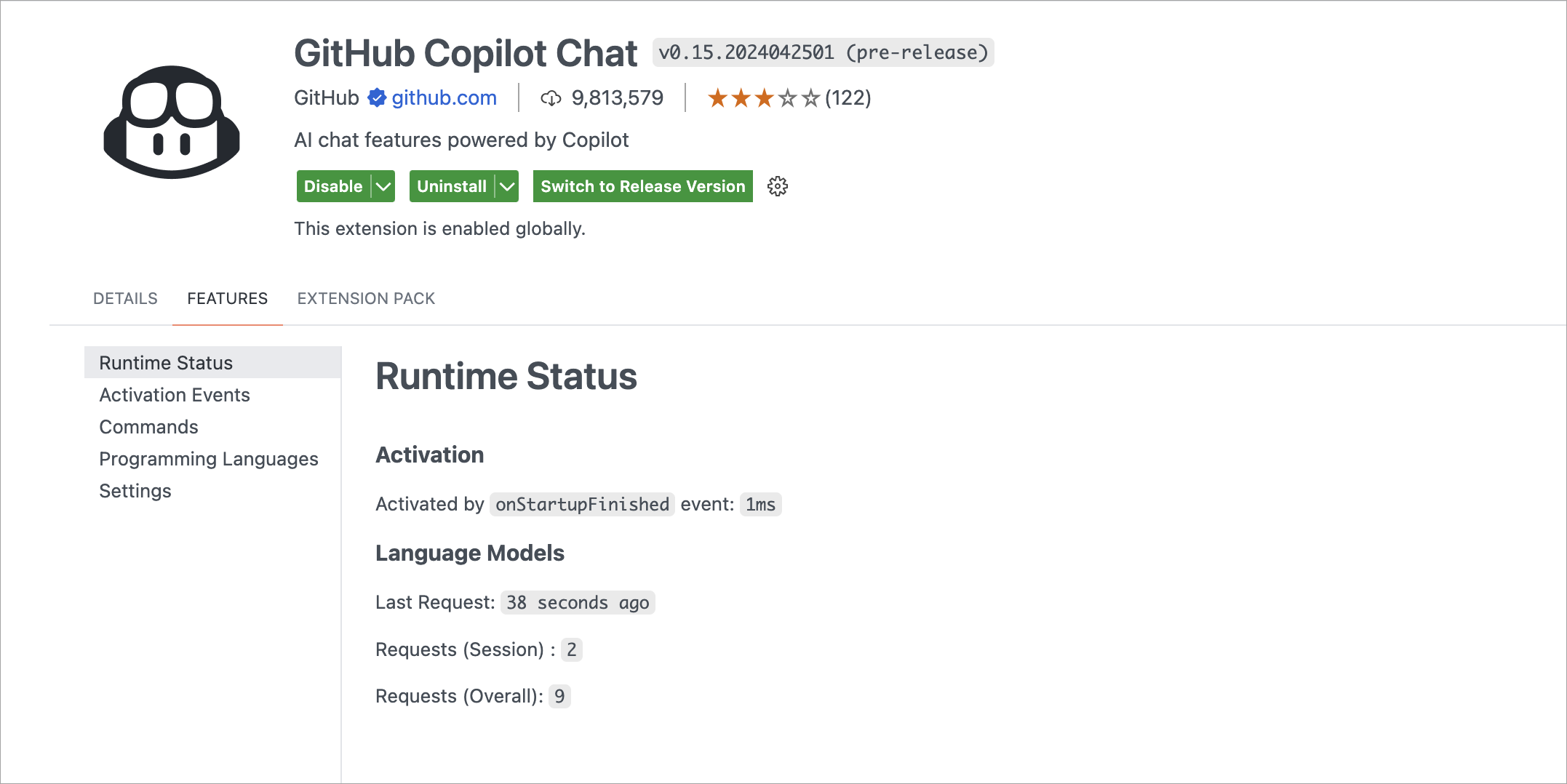

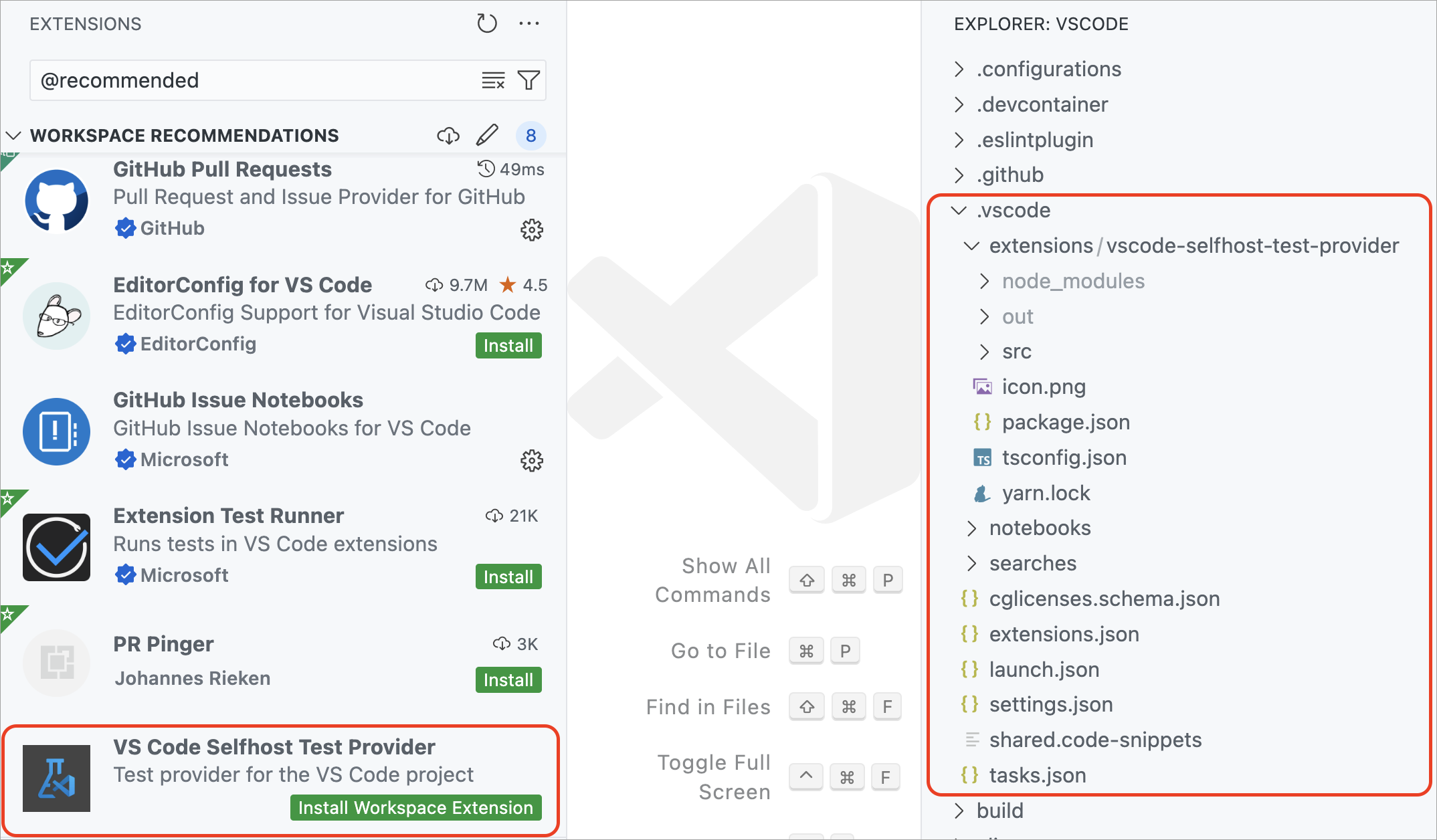

Local workspace extensions

Local workspace extensions, first introduced in the

VS Code 1.88 release

, is generally available. You can now include an extension directly in your workspace and install it only for that workspace. This feature is designed to cater to your specific workspace needs and provide a more tailored development experience.

To use this feature, place your extension in the `.vscode/extensions` folder within your workspace. VS Code then shows this extension in the Workspace Recommendations section of the Extensions view, from where users can install it. VS Code installs this extension only for that workspace. Users must trust the workspace before a local workspace extension can be installed and run.

For instance, consider the `vscode-selfhost-test-provider` extension in the VS Code repository. This extension plugs in test capabilities, enabling contributors to view and run tests directly within the workspace. The following screenshot shows the `vscode-selfhost-test-provider` extension in the Workspace Recommendations section of the Extensions view and the ability to install it.

Note that you should include the unpacked extension in the `.vscode/extensions` folder, not the VSIX file. You can also include only the extension's sources and build it as part of your workspace setup.

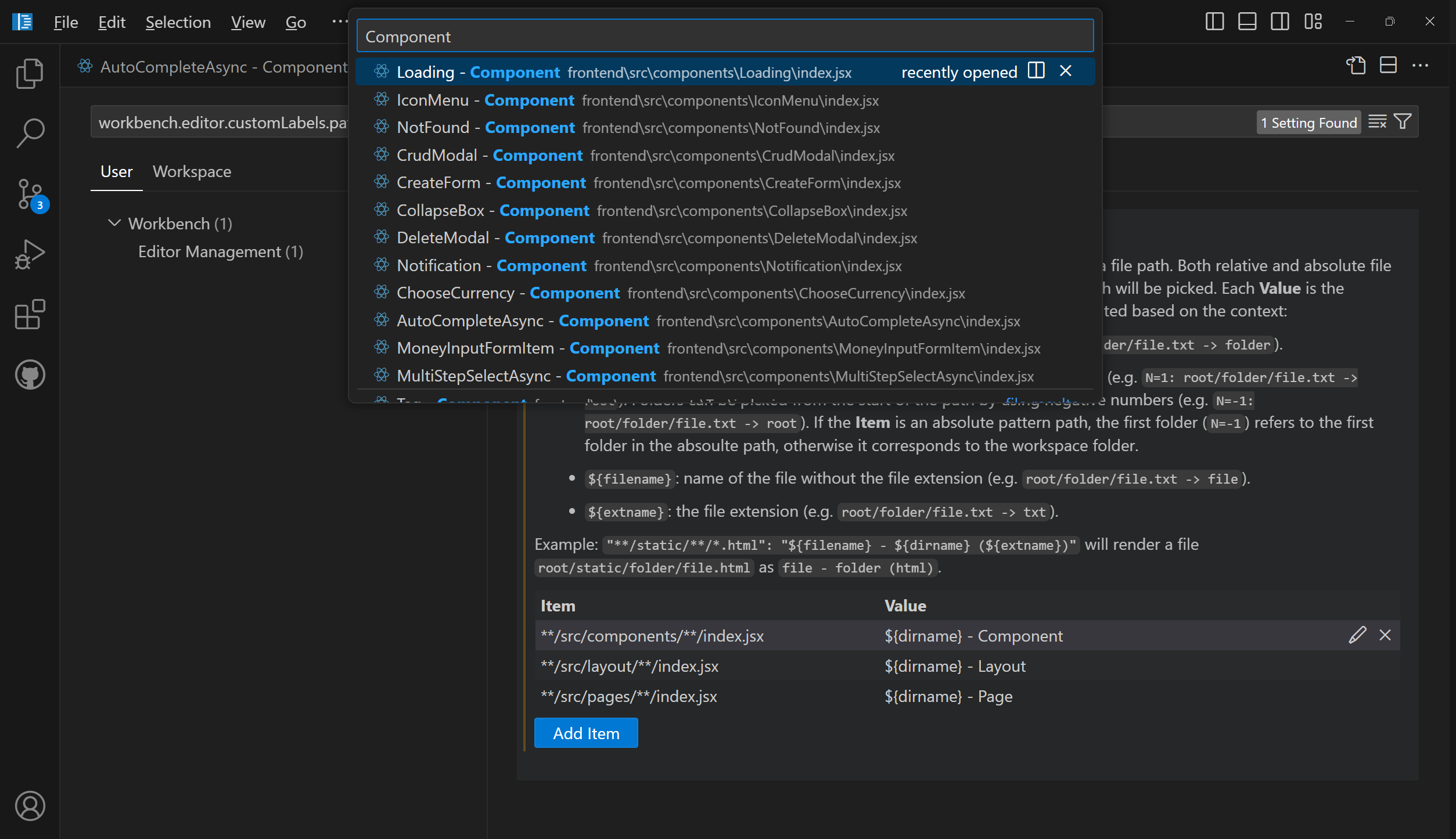

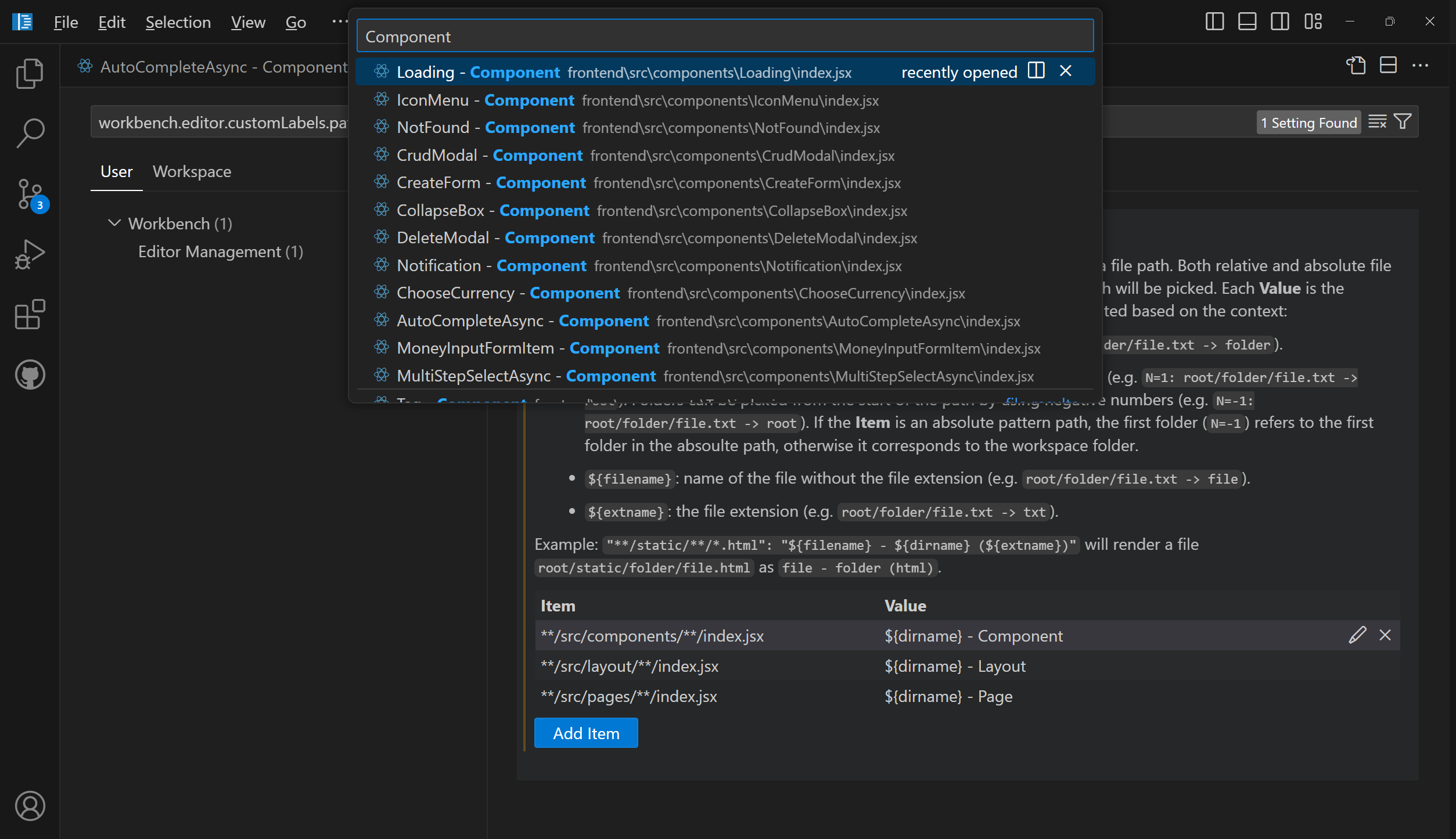

Custom Editor Labels in Quick Open

Last month, we introduced custom labels, which let you personalize the labels of your editor tabs. This feature is designed to help you more easily distinguish between tabs for files with the same name, such as `index.tsx` files.

Building on that, we've extended the use of custom labels to Quick Open, ⌘P (Windows, Linux Ctrl+P). Now, you can search for your files using the custom labels you've created, making file navigation more intuitive.

Customize keybindings

We've made it more straightforward to customize keybindings for user interface actions. Right-click on any action item in your workbench, and select Customize Keybinding. If the action has a `when` clause, it's automatically included, making it easier to set up your keybindings just the way you need them.

Find in trees keybinding

We have addressed an issue where the Find control was frequently opened unintentionally for a tree control, for example, appearing in the Explorer view when you meant to search in the editor.

To reduce these accidental activations, we have changed the default keybinding for opening the Find control in a tree control to ⌥⌘F (Windows, Linux Ctrl+Alt+F). If you prefer the previous setup, you can easily revert to the original keybinding for the `list.find` command using the Keyboard Shortcuts editor.

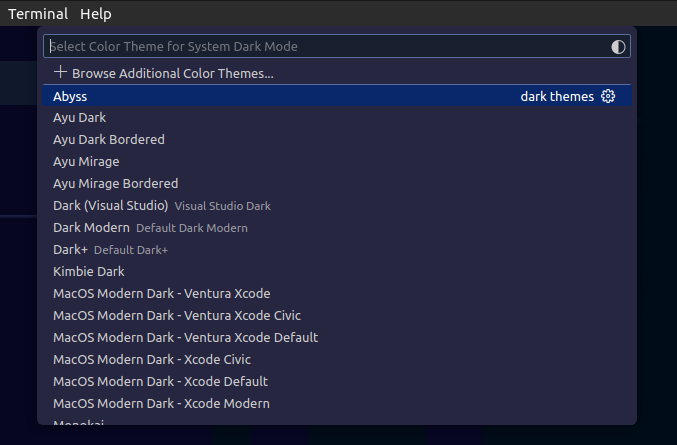

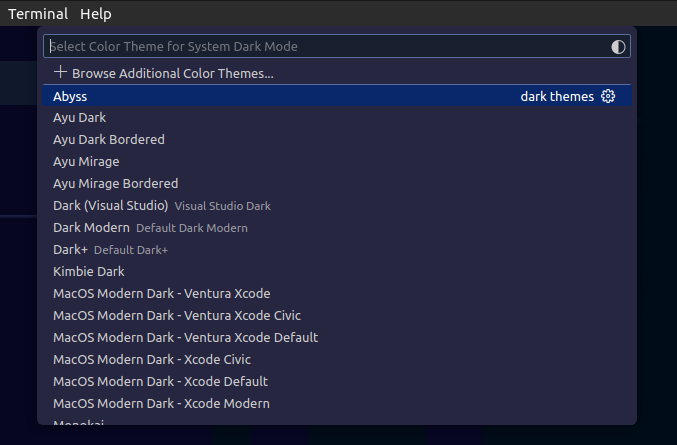

Auto detect system color mode improvements

If you wanted your theme to follow your system's color mode, you could already do this by enabling the `window.autoDetectColorScheme` setting.

When enabled, the current theme is defined by the `workbench.preferredDarkColorTheme` setting when in dark mode, and by the `workbench.preferredLightColorTheme` setting when in light mode.

In that case, the `workbench.colorTheme` setting is no longer considered; it is only used when `window.autoDetectColorScheme` is off.
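The rule above can be summarized as a small function. This is a sketch under stated assumptions: it models only the settings named in this section, ignores high contrast handling (`window.autoDetectHighContrast`), and is not VS Code's actual implementation. The `ThemeSettings` shape and `resolveColorTheme` name are illustrative.

```typescript
// Hypothetical model of how the active color theme is chosen from the
// settings described above (high contrast handling is ignored).
type ColorMode = "dark" | "light";

interface ThemeSettings {
  "window.autoDetectColorScheme": boolean;
  "workbench.colorTheme": string;
  "workbench.preferredDarkColorTheme": string;
  "workbench.preferredLightColorTheme": string;
}

function resolveColorTheme(settings: ThemeSettings, systemMode: ColorMode): string {
  if (!settings["window.autoDetectColorScheme"]) {
    // Auto-detection off: the regular color theme setting applies.
    return settings["workbench.colorTheme"];
  }
  // Auto-detection on: workbench.colorTheme is ignored, and the preferred
  // theme for the current system mode is used instead.
  return systemMode === "dark"
    ? settings["workbench.preferredDarkColorTheme"]
    : settings["workbench.preferredLightColorTheme"];
}

const settings: ThemeSettings = {
  "window.autoDetectColorScheme": true,
  "workbench.colorTheme": "Solarized Light",
  "workbench.preferredDarkColorTheme": "Default Dark Modern",
  "workbench.preferredLightColorTheme": "Default Light Modern",
};
resolveColorTheme(settings, "dark"); // returns "Default Dark Modern"
```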

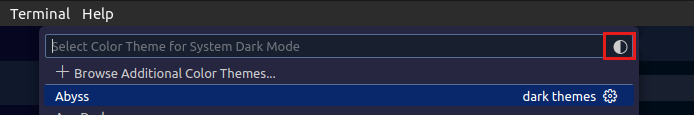

New this milestone, the theme picker dialog (the Preferences: Color Theme command) is now aware of the system color mode. Notice how the theme selection only shows dark themes when the system is in dark mode:

The dialog also has a new button to directly take you to the `window.autoDetectColorScheme` setting:

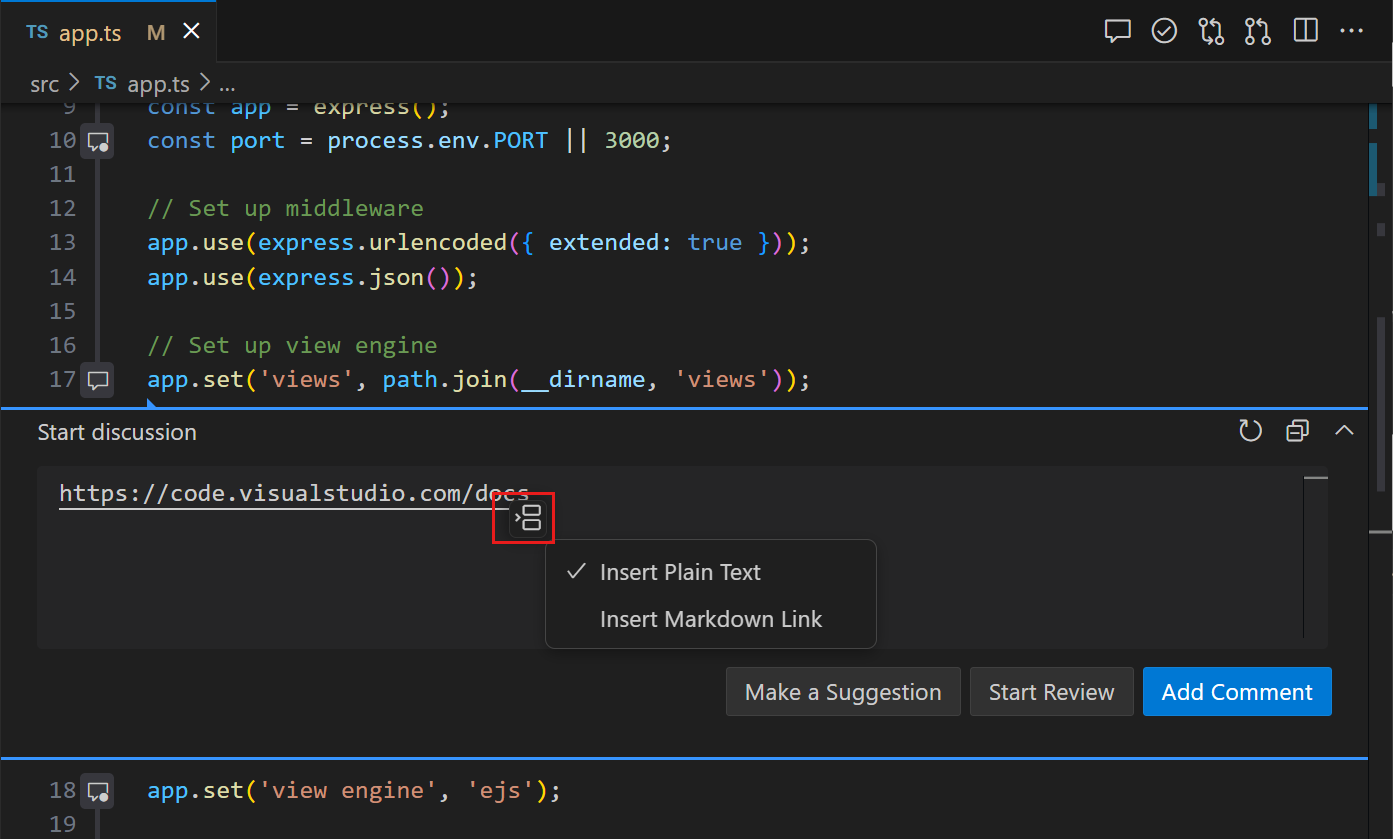

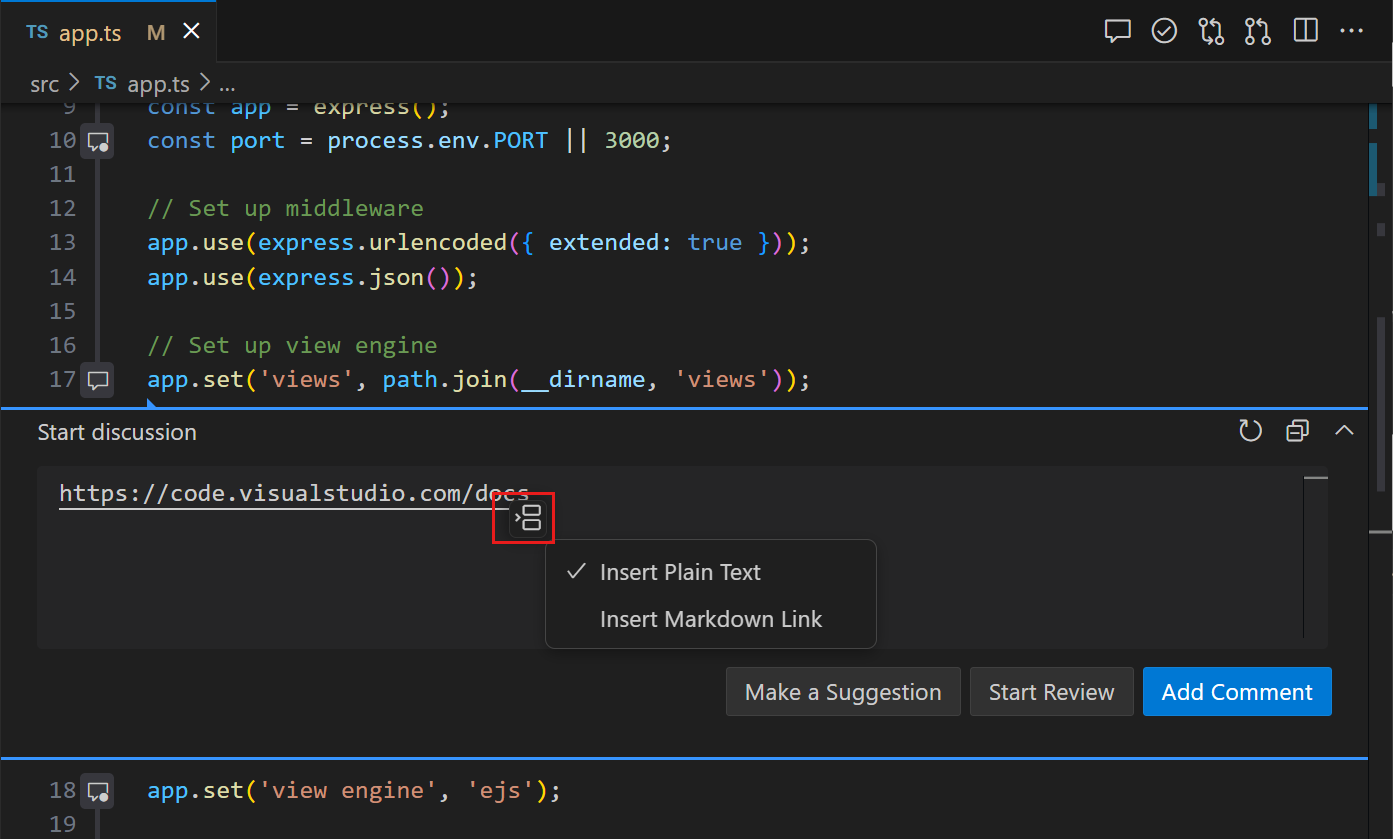

In the input editor of the Comments control, pasting a link has the same behavior as pasting a link in a Markdown file. The paste options are shown and you can choose to paste a Markdown link instead of the raw link that you copied.

Source Control

Save/restore open editors when switching branches

This milestone, we have addressed a long-standing feature request to save and restore editors when switching between source control branches. Use the `scm.workingSets.enabled` setting to enable this feature.

To control the open editors when switching to a branch for the first time, you can use the `scm.workingSets.default` setting. You can choose to have no open editors (`empty`) or to use the currently open editors (`current`, the default value).
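Here is a minimal sketch of the bookkeeping behind per-branch working sets, assuming a simple map from branch name to the list of open editors. The `WorkingSets` class and `switchBranch` method are hypothetical names for illustration; this is not the Git extension's implementation. The `defaultMode` parameter mirrors the `scm.workingSets.default` values.

```typescript
// Hypothetical sketch of per-branch working sets: open editors are saved when
// leaving a branch and restored when returning to it.
type WorkingSetDefault = "empty" | "current";

class WorkingSets {
  private readonly sets = new Map<string, string[]>();
  private readonly defaultMode: WorkingSetDefault;

  constructor(defaultMode: WorkingSetDefault = "current") {
    this.defaultMode = defaultMode;
  }

  // Returns the editors that should be open after switching branches.
  switchBranch(from: string, to: string, openEditors: string[]): string[] {
    // Remember what was open on the branch being left.
    this.sets.set(from, [...openEditors]);
    const saved = this.sets.get(to);
    if (saved !== undefined) {
      return [...saved];
    }
    // First visit to this branch: apply the configured default.
    return this.defaultMode === "empty" ? [] : [...openEditors];
  }
}

const ws = new WorkingSets("empty");
ws.switchBranch("main", "feature", ["README.md", "src/app.ts"]); // returns []
ws.switchBranch("feature", "main", ["notes.md"]); // returns ["README.md", "src/app.ts"]
```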

Dedicated commands for viewing changes

To make it easier to view specific types of changes in the multi-file diff editor, we have added a set of new commands to the Command Palette: Git: View Staged Changes, Git: View Changes, and Git: View Untracked Changes.

Notebooks

Minimal error renderer

You can use a new layout for the notebook error renderer with the `notebook.output.minimalErrorRendering` setting. This new layout displays only the error and message, along with a control to expand the full error stack into view.

Disabled backups for large notebooks

Periodic file backups are now disabled for large notebook files to reduce the amount of time spent writing the file to disk. The limit can be adjusted with the `notebook.backup.sizeLimit` setting. We are also experimenting with an option to avoid blocking the renderer while saving the notebook file with `notebook.experimental.remoteSave`, so that auto-saves can occur without a performance penalty.

Over the past few months, we have received feedback about performance regressions in the notebook editor. The regressions are difficult to pinpoint and not easily reproducible. Thanks to the community continuously providing logs and feedback, we identified that the regressions came from the outline and sticky scroll features as we added new functionality to them. These issues have been fixed in this release.

We appreciate the community's feedback and patience, and we continue to improve the notebook editor's performance. If you continue to experience performance issues, please don't hesitate to file a new issue in the VS Code repo.

Search

Quick Search

Quick Search enables you to quickly perform a text search across your workspace files. Quick Search is no longer experimental, so give it a try! ✨🔍

Theme: Night Owl Light (preview on vscode.dev)

Note that Quick Search commands and settings no longer have the "experimental" keyword in their identifiers. For example, the command ID `workbench.action.experimental.quickTextSearch` became `workbench.action.quickTextSearch`. This might be relevant if you have settings or keybindings that use these old IDs.

Search tree recursive expansion

We have a new context menu option that enables you to recursively expand a selected tree node in the search tree.

Theme: Night Owl Light (preview on vscode.dev)

Git Bash shell integration enabled by default

Shell integration for Git Bash is now automatically enabled. This brings many features to Git Bash, such as command navigation, sticky scroll, quick fixes, and more.

On most Linux distributions, middle-click pastes the selection. Similar behavior can now be enabled on other operating systems by setting `terminal.integrated.middleClickBehavior` to `paste`, which pastes the regular clipboard content on middle-click.

Expanded ANSI hyperlink support

ANSI hyperlinks made via the OSC 8 escape sequence previously supported only the `http` and `https` protocols but now work with any protocol. By default, for security reasons, only links with the `file`, `http`, `https`, `mailto`, `vscode`, and `vscode-insiders` protocols activate, but you can add more via the `terminal.integrated.allowedLinkSchemes` setting.
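The sketch below shows how a program can emit an OSC 8 hyperlink (`ESC ] 8 ; params ; URI ST text ESC ] 8 ; ; ST`, using `ESC \` as the string terminator). It also includes a rough model of the default scheme allow-list described above. The `isActivatable` check is a hypothetical illustration of the documented defaults, not VS Code's actual link handling; `extraSchemes` stands in for values added through `terminal.integrated.allowedLinkSchemes`.

```typescript
// Builds an OSC 8 hyperlink: ESC ] 8 ; params ; URI ST <text> ESC ] 8 ; ; ST
const ESC = "\x1b";
const ST = `${ESC}\\`; // string terminator (ESC \)

function osc8Link(uri: string, text: string): string {
  return `${ESC}]8;;${uri}${ST}${text}${ESC}]8;;${ST}`;
}

// Hypothetical model of the default allow-list described above.
const DEFAULT_SCHEMES = ["file", "http", "https", "mailto", "vscode", "vscode-insiders"];

function isActivatable(uri: string, extraSchemes: string[] = []): boolean {
  const match = /^([a-zA-Z][a-zA-Z0-9+.-]*):/.exec(uri);
  if (!match) return false;
  const scheme = match[1].toLowerCase();
  return [...DEFAULT_SCHEMES, ...extraSchemes].includes(scheme);
}

// Prints a clickable "VS Code" link in terminals that support OSC 8.
console.log(osc8Link("https://code.visualstudio.com", "VS Code"));
```

With these defaults, an `ssh://` link would render but not activate until `ssh` is added to the allowed schemes.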

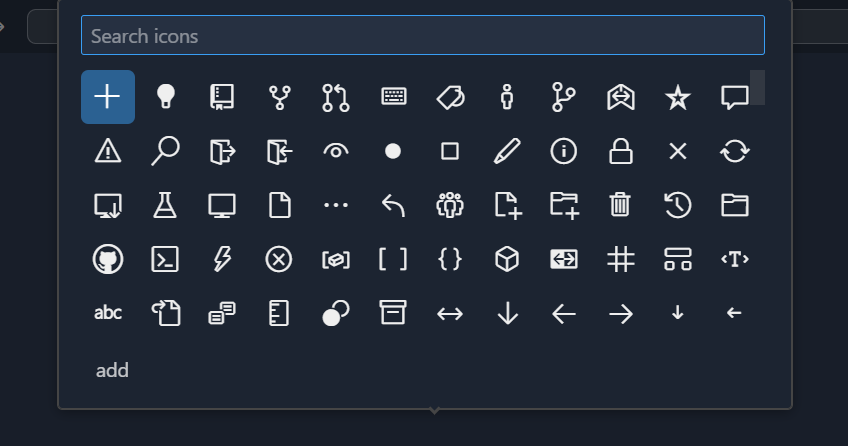

New icon picker for the terminal

Selecting the change icon from the terminal tab context menu now opens the new icon picker that was built for profiles:

Theme: Sapphire (preview on vscode.dev)

Support for window size reporting

The terminal now responds to the following escape sequence requests:

- `CSI 14 t` to report the terminal's window size in pixels
- `CSI 16 t` to report the terminal's cell size in pixels
- `CSI 18 t` to report the terminal's window size in characters
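Here is a sketch of the request and reply formats for these sequences, assuming the conventional xterm reply shapes (`CSI 4 ; height ; width t`, `CSI 6 ; height ; width t`, and `CSI 8 ; rows ; columns t`). A program would write one of the request strings to the terminal and read the reply from stdin in raw mode. The `REQUESTS` table and `parseSizeReport` helper are illustrative names, not part of any VS Code API.

```typescript
// Window-size requests and replies, following the xterm convention (CSI = ESC [):
//   CSI 14 t -> CSI 4 ; height ; width t   (window/text area, pixels)
//   CSI 16 t -> CSI 6 ; height ; width t   (character cell, pixels)
//   CSI 18 t -> CSI 8 ; rows ; columns t   (window/text area, characters)
const CSI = "\x1b[";

type SizeKind = "windowPixels" | "cellPixels" | "windowChars";
type SizeReport = { kind: SizeKind; height: number; width: number };

const REQUESTS = {
  windowPixels: `${CSI}14t`,
  cellPixels: `${CSI}16t`,
  windowChars: `${CSI}18t`,
} as const;

function parseSizeReport(reply: string): SizeReport | undefined {
  const match = /^\x1b\[(4|6|8);(\d+);(\d+)t$/.exec(reply);
  if (!match) return undefined;
  const kinds = { "4": "windowPixels", "6": "cellPixels", "8": "windowChars" } as const;
  return {
    kind: kinds[match[1] as "4" | "6" | "8"],
    height: Number(match[2]),
    width: Number(match[3]),
  };
}

parseSizeReport("\x1b[8;24;80t"); // returns { kind: "windowChars", height: 24, width: 80 }
```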

⚠️ Deprecation of the canvas renderer

The terminal features three different renderers: the DOM renderer, the WebGL renderer, and the canvas renderer. We have wanted to remove the canvas renderer for some time but were blocked by unacceptable performance in the DOM renderer and WebKit not implementing `webgl2`. Both of these issues have now been resolved!

This release, we removed the canvas renderer from the fallback chain, so it's only enabled when the `terminal.integrated.gpuAcceleration` setting is explicitly set to `"canvas"`. We plan to remove the canvas renderer entirely in the next release. Please let us know if you have issues when `terminal.integrated.gpuAcceleration` is set to either `"on"` or `"off"`.

Debug

JavaScript Debugger

The JavaScript debugger now automatically looks up binaries in the `node_modules/.bin` folder when resolving the `runtimeExecutable` configuration, so you can refer to them by name.

Notice in the following example that you can just reference `mocha`, without having to specify the full path to the binary.

```diff
 {
   "name": "Run Tests",
   "type": "node",
   "request": "launch",
-  "runtimeExecutable": "${workspaceFolder}/node_modules/.bin/mocha",
-  "windows": {
-    "runtimeExecutable": "${workspaceFolder}/node_modules/.bin/mocha.cmd"
-  },
+  "runtimeExecutable": "mocha"
 }
```
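Conceptually, the lookup replaces the hand-written per-platform paths in the old configuration. The following is a hypothetical sketch of that resolution step, not the JavaScript debugger's implementation. It assumes `.cmd` shims on Windows (as in the removed `windows` block) and assumes that a name with no local binary is left for a normal `PATH` lookup. The `exists` callback is injected so the logic can be tried without a real file system.

```typescript
import * as path from "node:path";

// Hypothetical sketch: resolve a bare `runtimeExecutable` name against the
// workspace's node_modules/.bin folder. Models the documented behavior only.
function resolveRuntimeExecutable(
  name: string,
  workspaceFolder: string,
  platform: string,
  exists: (file: string) => boolean,
): string {
  const p = platform === "win32" ? path.win32 : path.posix;
  // Anything that already looks like a path is used as-is.
  if (p.isAbsolute(name) || name.includes("/") || name.includes("\\")) {
    return name;
  }
  // npm installs `.cmd` shims for package binaries on Windows.
  const file = platform === "win32" ? `${name}.cmd` : name;
  const candidate = p.join(workspaceFolder, "node_modules", ".bin", file);
  // Assumption: without a local binary, leave the name for a PATH lookup.
  return exists(candidate) ? candidate : name;
}

const local = new Set(["/work/node_modules/.bin/mocha"]);
resolveRuntimeExecutable("mocha", "/work", "linux", (f) => local.has(f));
// returns "/work/node_modules/.bin/mocha"
```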

Languages

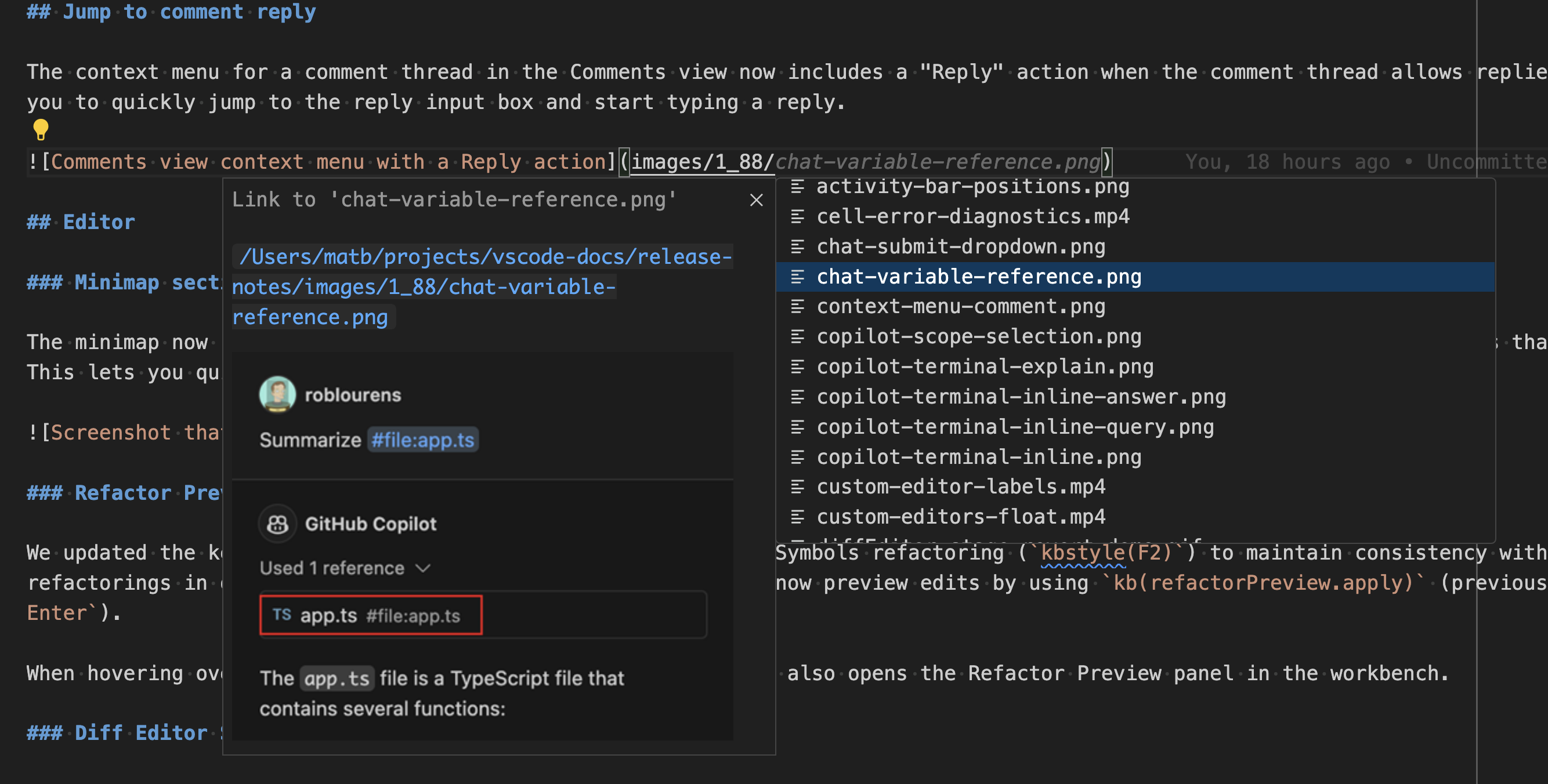

Image previews in Markdown path completions

VS Code's built-in Markdown tooling provides path completions for links and images in your Markdown. When completing a path to an image or video file, we now show a small preview directly in the completion details. This can help you find the image or video you're after more easily.

Hover to preview images and videos in Markdown

Want a quick preview of an image or video in some Markdown without opening the full Markdown preview? Now you can hover over an image or video path to see a small preview of it:

Did you know that VS Code's built-in Markdown support lets you rename headers using F2? This is useful because it also automatically updates all links to that header. This iteration, we improved handling of renaming in cases where a Markdown file has duplicated headers.

Consider the Markdown file:

```markdown
# Readme
- [Example 1](#example)
- [Example 2](#example-1)

## Example
...

## Example
...
```

The two `## Example` headers have the same text but can each be linked to individually by using a unique ID (`#example` and `#example-1`). Previously, if you renamed the first `## Example` header to `## First Example`, the `#example` link would be correctly changed to `#first-example`, but the `#example-1` link would not be changed. However, `#example-1` is no longer a valid link after the rename because there are no longer duplicated `## Example` headers.

We now correctly handle this scenario. If you rename the first `## Example` header to `## First Example` in the document above, for instance, the new document will be:

```markdown
# Readme
- [Example 1](#first-example)
- [Example 2](#example)

## First Example
...

## Example
...
```

Notice how both links have now been automatically updated, so that they both remain valid!
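The reason `#example-1` stops being valid follows from how duplicate headers get unique IDs. Below is a simplified, GitHub-style slug sketch: lowercase, drop punctuation, turn spaces into hyphens, and append `-1`, `-2`, and so on to repeated slugs. VS Code's built-in Markdown slugifier may differ in edge cases such as Unicode and punctuation handling, so treat this as an illustration only.

```typescript
// Simplified, GitHub-style slug generation with duplicate handling.
function slugify(text: string): string {
  return text
    .trim()
    .toLowerCase()
    .replace(/[^\p{L}\p{N}\s-]/gu, "") // drop punctuation
    .replace(/\s+/g, "-"); // spaces become hyphens
}

// Returns one unique ID per header, in document order.
function headerIds(headers: string[]): string[] {
  const seen = new Map<string, number>();
  return headers.map((header) => {
    const base = slugify(header);
    const count = seen.get(base) ?? 0;
    seen.set(base, count + 1);
    // The first occurrence keeps the plain slug; later ones get -1, -2, ...
    return count === 0 ? base : `${base}-${count}`;
  });
}

headerIds(["Readme", "Example", "Example"]); // returns ["readme", "example", "example-1"]
headerIds(["Readme", "First Example", "Example"]); // returns ["readme", "first-example", "example"]
```

After the rename, the remaining `## Example` header is no longer a duplicate, so its ID drops the `-1` suffix. This is why the second link must be rewritten as well.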

Remote Development

The Remote Development extensions allow you to use a Dev Container, a remote machine via SSH or Remote Tunnels, or the Windows Subsystem for Linux (WSL) as a full-featured development environment.

Highlights include:

You can learn more about these features in the Remote Development release notes.

Contributions to extensions

GitHub Copilot

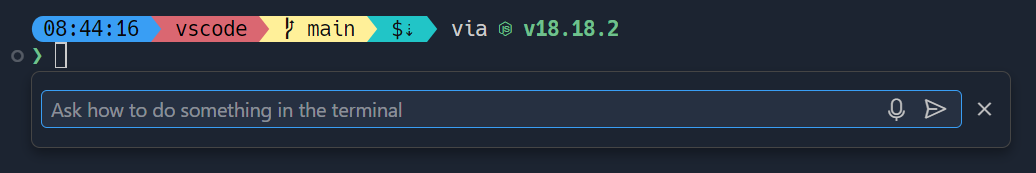

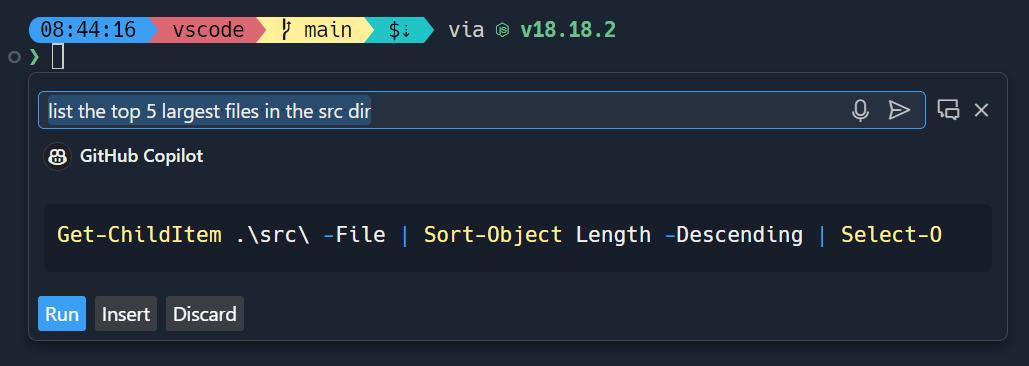

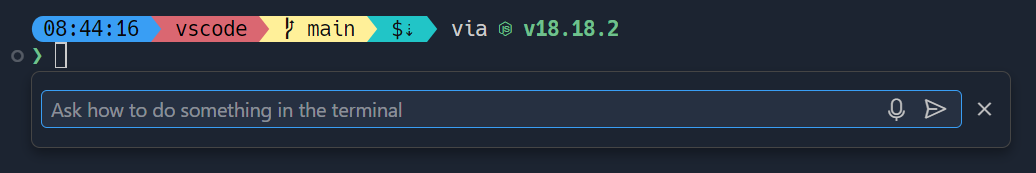

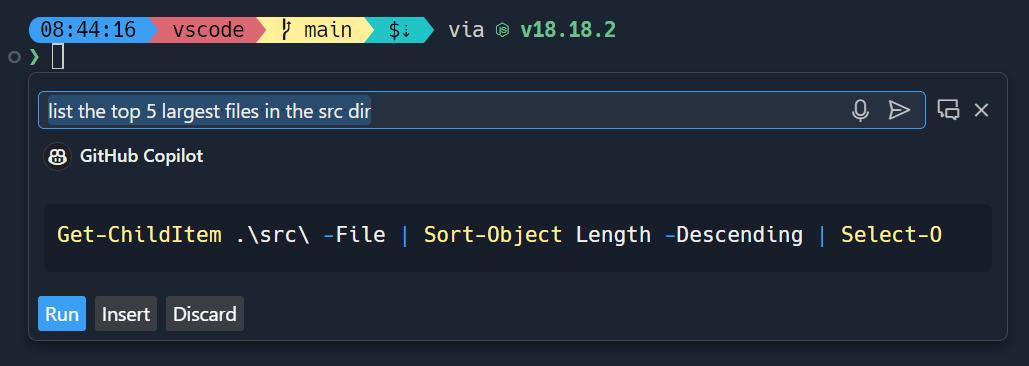

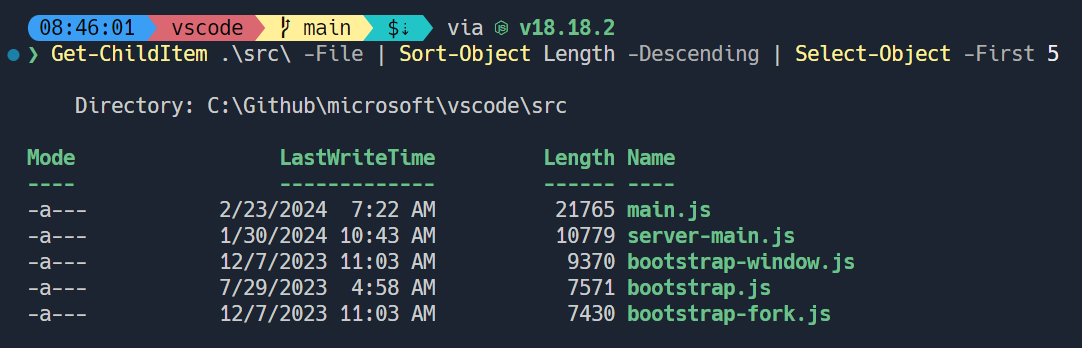

Terminal inline chat

Terminal inline chat is now the default experience in the terminal. Use the ⌘I (Windows, Linux Ctrl+I) keyboard shortcut when the terminal is focused to bring it up.

The terminal inline chat uses the `@terminal` chat participant, which has context about the integrated terminal's shell and its contents.

Once a command is suggested, use ⌘Enter (Windows, Linux Ctrl+Enter) to run the command in the terminal or ⌥Enter (Windows, Linux Alt+Enter) to insert the command into the terminal. You can also edit the command directly in Copilot's response before running it (currently Ctrl+Down, Tab, Tab on Windows and Linux, and Cmd+Down, Tab, Tab on macOS).

Copilot-powered rename suggestions can now be triggered by using the sparkle icon in the rename control.

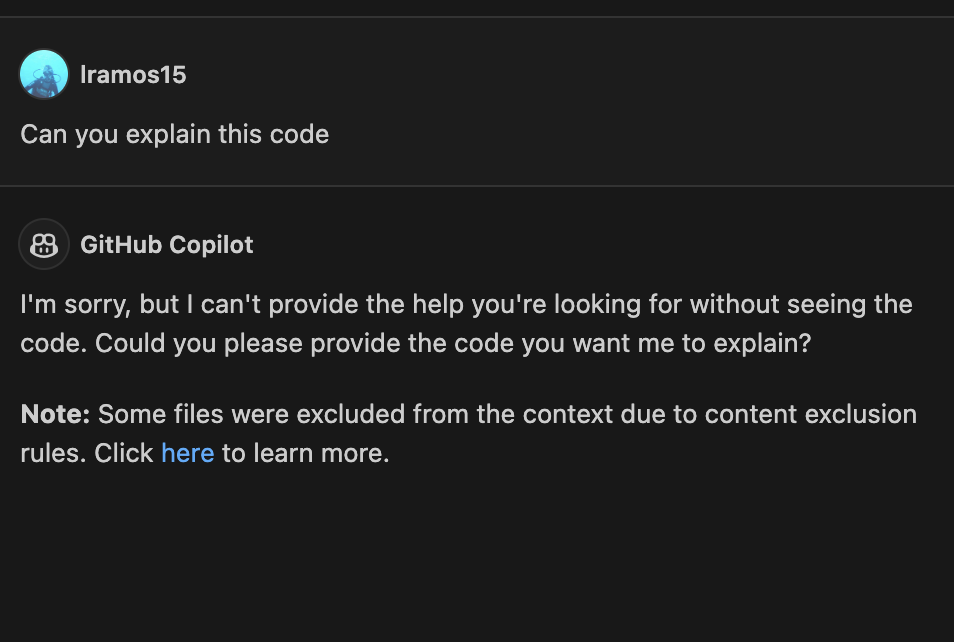

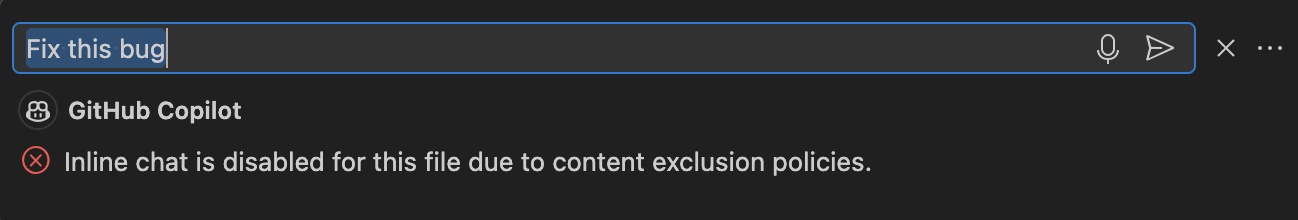

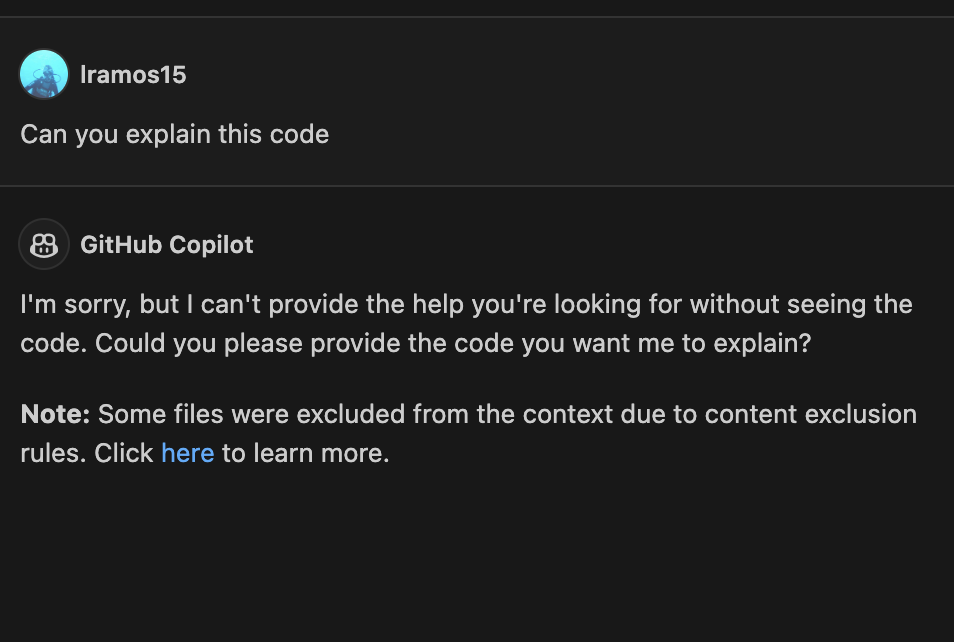

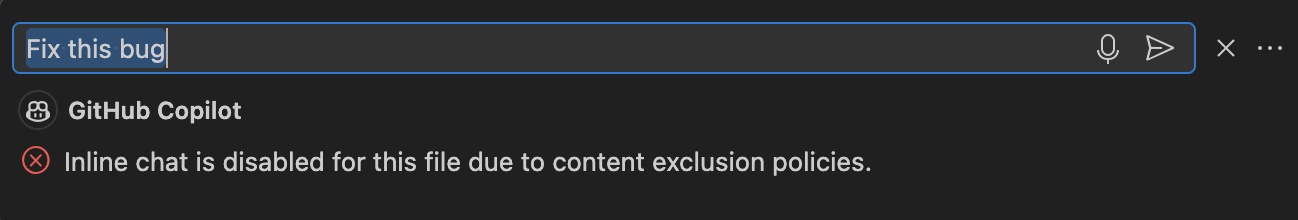

Content Exclusions

GitHub Copilot Content Exclusions is now supported in Copilot Chat for all Copilot for Business and Copilot Enterprise customers. Information on configuring content exclusions can be found on GitHub Docs.

When a file is excluded by content exclusions, Copilot Chat is unable to see the contents or the path of the file, and it's not used in generating an LLM suggestion.

Preview: Generate in Notebook Editor

We now support inserting new cells with inline chat activated automatically in the notebook editor. When the `notebook.experimental.generate` setting is set to `true`, we show a Generate button on the notebook toolbar and on the insert toolbar between cells. It can also be triggered by pressing Cmd+I on macOS (or Ctrl+I on Windows/Linux) when the focus is on the notebook list or cell container. This feature can help simplify the process of generating code in new cells with the help of the language model.

Python

"Implement all inherited abstract classes" code action

Working with abstract classes is now easier when using Pylance. When defining a new class that inherits from an abstract one, you can now use the Implement all inherited abstract classes code action to automatically implement all abstract methods and properties from the parent class:

Theme: Catppuccin Macchiato (preview on vscode.dev)

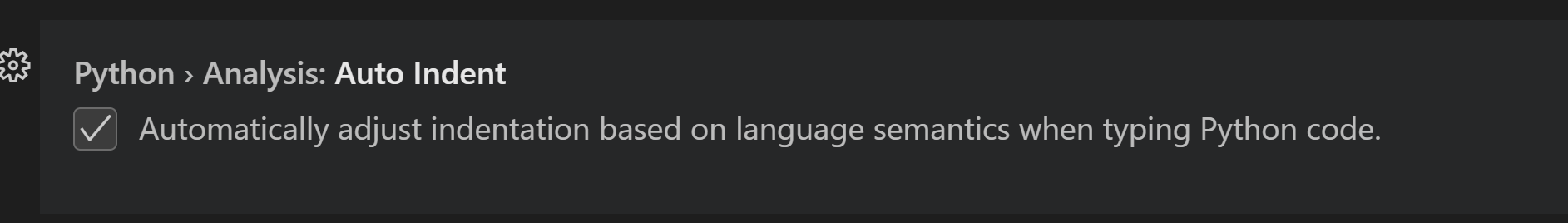

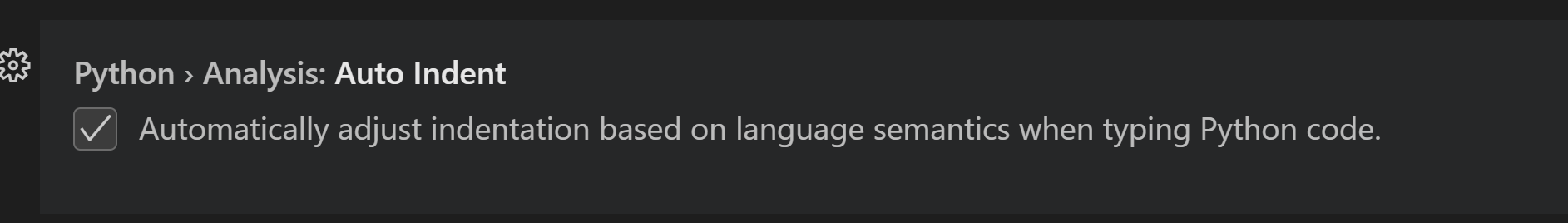

New auto indentation setting

Previously, Pylance's auto indentation behavior was controlled through the `editor.formatOnType` setting. This was problematic if you wanted to disable auto indentation but keep format on type enabled for other supported tools. To solve this problem, Pylance now has its own setting to control its auto indentation behavior, `python.analysis.autoIndent`, which is enabled by default.

Debugpy removed from the Python extension in favor of the Python Debugger extension

Now that debugging functionality is handled by the Python Debugger extension, we have removed debugpy from the Python extension.

As part of this change, `"type": "python"` and `"type": "debugpy"` specified in your `launch.json` file both point to the Python Debugger extension, so no changes to your `launch.json` files are needed to run and debug effectively. Moving forward, we recommend using `"type": "debugpy"`, as this directly corresponds to the Python Debugger extension.

Socket disablement now possible during testing

You can now run tests with socket disablement from the testing UI on the Python Testing Rewrite. This is made possible by switching the communication between the Python extension and the test run subprocess to named pipes.

Minor testing bugs updated

The Test view now correctly displays projects that use testscenarios with unittest, as well as parameterized tests inside nested classes. Additionally, the Test Explorer now handles tests in workspaces with symlinks, specifically workspace roots that are children of symlinked paths, which is particularly helpful in WSL scenarios.

The Pylance team has been receiving feedback that Pylance's performance has degraded in the past few releases. We have made several smaller improvements in memory consumption and indexing performance to address various reported issues. If you are still experiencing performance issues with Pylance, please file an issue using the Pylance: Report Issue command from the Command Palette, ideally with logs, code samples, and/or the packages that are installed in the working environment.

Hex Editor

The hex editor now has an insert mode, in addition to its longstanding "replace" mode. The insert mode enables new bytes to be added within and at the end of files, and it can be toggled using the Insert key or from the Status Bar.

The hex editor now also shows the currently hovered byte in the status bar.
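The difference between the two modes can be illustrated on a byte buffer. This is a conceptual model, not the Hex Editor extension's code, and the function names are illustrative. Replace mode overwrites a byte in place and never changes the file length. Insert mode shifts the following bytes to the right, which is also how bytes get appended at the end.

```typescript
// Replace mode: overwrite one byte; the length never changes.
// (Assumes 0 <= offset < data.length.)
function replaceByte(data: Uint8Array, offset: number, value: number): Uint8Array {
  const out = data.slice();
  out[offset] = value;
  return out;
}

// Insert mode: shift later bytes right; offset === data.length appends.
function insertByte(data: Uint8Array, offset: number, value: number): Uint8Array {
  const out = new Uint8Array(data.length + 1);
  out.set(data.subarray(0, offset), 0);
  out[offset] = value;
  out.set(data.subarray(offset), offset + 1);
  return out;
}

const bytes = Uint8Array.from([0x48, 0x69]); // "Hi"
replaceByte(bytes, 1, 0x6f); // returns bytes 48 6f ("Ho")
insertByte(bytes, 1, 0x65); // returns bytes 48 65 69 ("Hei")
insertByte(bytes, 2, 0x21); // returns bytes 48 69 21 ("Hi!")
```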

GitHub Pull Requests

There has been more progress on the GitHub Pull Requests extension, which enables you to work on, create, and manage pull requests and issues. New features include:

- Experimental conflict resolution for non-checked-out PRs is available when enabled by the hidden setting `"githubPullRequests.experimentalUpdateBranchWithGitHub": true`. This feature enables you to resolve conflicts in a PR without checking out the branch locally. The feature is still experimental and will not work in all cases.
- There's an Accessibility Help Dialog that shows when Open Accessibility Help is triggered from the Pull Requests and Issues views.
- All review action buttons show in the Active Pull Request sidebar view when there's enough space.

Review the changelog for the 0.88.0 release of the extension to learn about the other highlights.

TypeScript

File watching handled by VS Code core

A new experimental setting, `typescript.tsserver.experimental.useVsCodeWatcher`, controls whether the TypeScript extension uses VS Code's core file watching support for its file watching needs. TypeScript makes extensive use of file watching, usually with its own Node.js-based implementation. By using VS Code's file watcher, watching should be more efficient and more reliable, and consume fewer resources. We plan to gradually enable this feature for users in May and monitor for regressions.

Preview Features

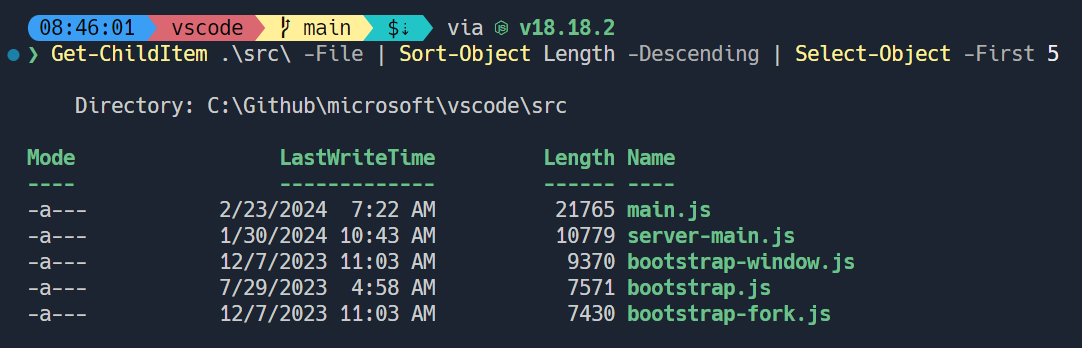

VS Code-native intellisense for PowerShell

We've had a prototype of PowerShell IntelliSense inside the terminal for some time now, and we only recently found time to invest in polishing it. This is what it looks like:

Currently, it triggers on the `-` character or when Ctrl+Space is pressed. To enable this feature, set `"terminal.integrated.shellIntegration.suggestEnabled": true` in your `settings.json` file (it won't show up in the Settings UI currently).

It's still early for this feature, but we'd love to hear your feedback on it. Some of the bigger things we have planned are to make triggering it more reliable (#211222), make the suggestions more consistent regardless of where the popup is triggered (#211364), and bring the experience as close to the editor IntelliSense experience as possible (#211076, #211194).

Automatic Markdown link updates on paste

Say you're writing some Markdown documentation and you realize that one section of the doc actually belongs somewhere else. So, you copy and paste it over into another file. All good, right? Well, if the copied text contained any relative path links, reference links, or images, then these will likely now be broken, and you'll have to fix them up manually. This can be a real pain, but thankfully the new Update Links on Paste feature is here to help!

To enable this functionality, just set `"markdown.experimental.updateLinksOnPaste": true`. Once enabled, when you copy and paste text between Markdown files in the current editor, VS Code automatically fixes all relative path links, reference links, and all images/videos with relative paths.

After pasting, if you realize that you instead want to insert the exact text you copied, you can use the paste control to switch back to normal copy/paste behavior.
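The core idea behind fixing relative links can be sketched as re-basing each link target from the source file's folder to the destination file's folder. This is a hypothetical simplification, not VS Code's implementation. `rebaseLinks` is an illustrative name, and the sketch handles only inline links and images of the form `[text](target)` and `![alt](target)`, while the real feature also covers reference links. URLs with a scheme, root-relative paths, and in-page `#anchors` are left untouched.

```typescript
import * as path from "node:path";

// Hypothetical sketch: re-base relative inline link/image targets when text is
// moved from `fromFile` to `toFile` (workspace-relative, POSIX-style paths).
function rebaseLinks(markdown: string, fromFile: string, toFile: string): string {
  const fromDir = path.posix.dirname(fromFile);
  const toDir = path.posix.dirname(toFile);
  return markdown.replace(
    /(!?\[[^\]]*\]\()([^)\s]+)(\))/g,
    (whole: string, open: string, target: string, close: string) => {
      // Leave URLs with a scheme, root-relative paths, and anchors alone.
      if (/^[a-z][a-z0-9+.-]*:/i.test(target) || target.startsWith("/") || target.startsWith("#")) {
        return whole;
      }
      const resolved = path.posix.join(fromDir, target);
      return `${open}${path.posix.relative(toDir, resolved)}${close}`;
    },
  );
}

rebaseLinks(
  "See ![shot](../images/shot.png) and [setup](./install.md).",
  "docs/guide/setup.md",
  "README.md",
);
// returns "See ![shot](docs/images/shot.png) and [setup](docs/guide/install.md)."
```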

Support for TypeScript 5.5

We now support the TypeScript 5.5 beta. Check out the TypeScript 5.5 beta blog post and iteration plan for details on this release.

Editor highlights include:

- Syntax checks for regular expressions.
- File watching improvements.

To start using the TypeScript 5.5 beta, install the TypeScript Nightly extension. Please share feedback and let us know if you run into any bugs with TypeScript 5.5.

API

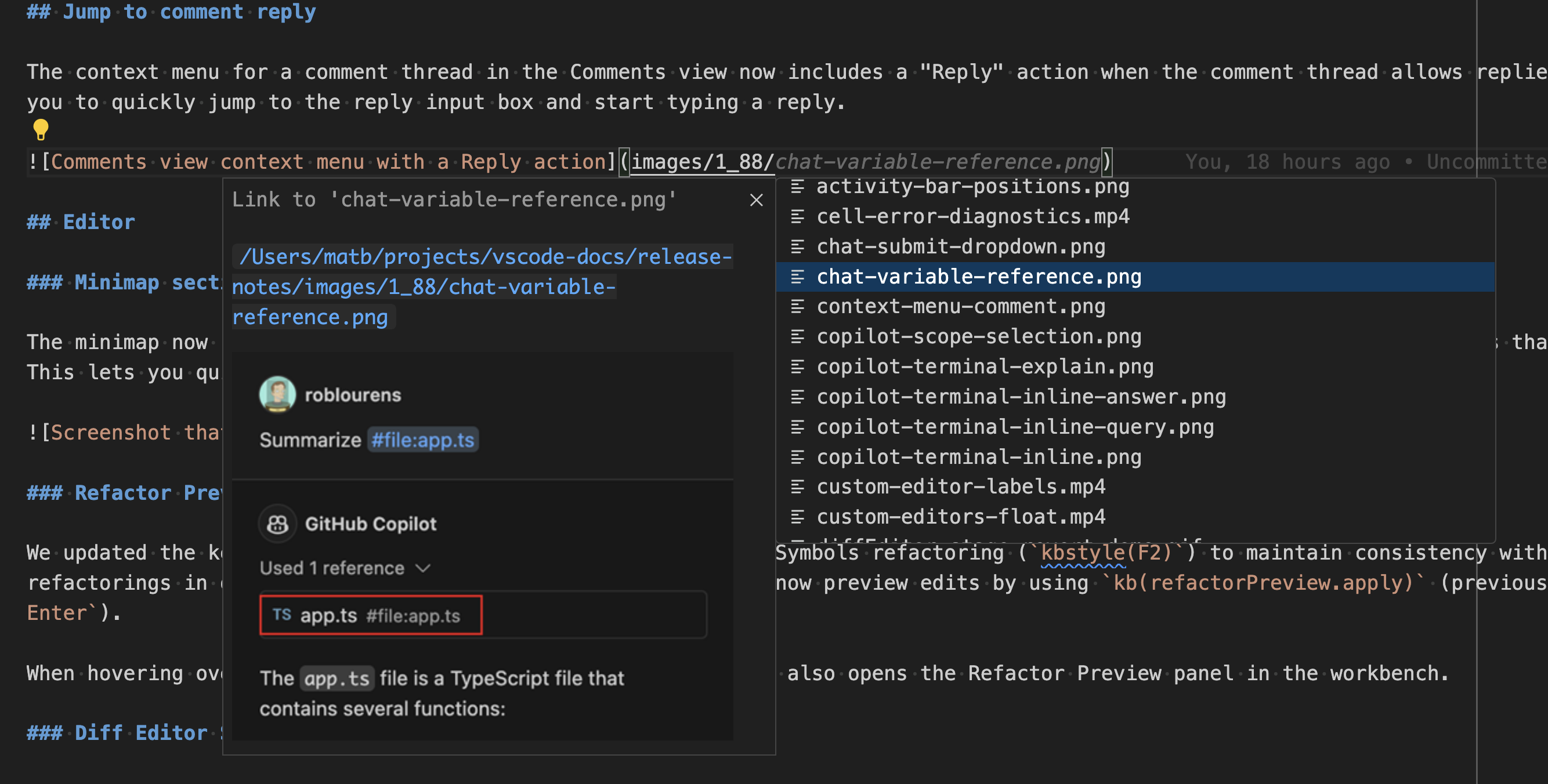

When writing a new comment, VS Code creates a stripped-down text editor, which is backed by a `TextDocument`, just like the main editors in VS Code are. This iteration, we've enabled some additional API features in these comment text editors. These include:

- Support for workspace edits.
- Support for diagnostics.
- Support for the paste-as proposed API.

Comment text documents can be identified by a URI that has the `comment` scheme.

We're looking forward to seeing what extensions build with this new functionality!

Finalized Window Activity API

The window activity API has been finalized. This API provides a simple additional `WindowState.active` boolean that extensions can use to determine if the window has recently been interacted with.

```typescript
vscode.window.onDidChangeWindowState(e => console.log('Is the user active?', e.active));
```

Proposed APIs

Accessibility Help Dialog for a view

An Accessibility Help Dialog can be added for any extension-contributed view via the `accessibilityHelpContent` property. With focus in the view, screen reader users hear a hint to open the dialog, ⌥F1 (Windows Alt+F1, Linux Shift+Alt+F1), which contains an overview and helpful commands.

This API is used by the GitHub Pull Requests extension's Issues and PR views.

Language model and Chat API

The language model namespace (`vscode.lm`) exports new functions to retrieve language model information and to count tokens for a given string: `getLanguageModelInformation` and `computeTokenLength`, respectively. You should use these functions to build prompts that are within the limits of a language model.
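A common use of token counting is trimming chat history to fit a model's limit. The sketch below keeps the newest messages that fit a token budget. The exact signatures of the proposed `computeTokenLength` and `getLanguageModelInformation` are assumptions here, so the sketch takes a plain async `countTokens` callback and a numeric `maxTokens`. In an extension, you might back these with those proposed functions. `fitToBudget` is a hypothetical helper name, and the character-based `approxCount` is a rough stand-in used only for demonstration.

```typescript
// Hypothetical sketch: keep the newest chat messages that fit a token budget.
type CountTokens = (text: string) => Promise<number>;

async function fitToBudget(messages: string[], maxTokens: number, countTokens: CountTokens): Promise<string[]> {
  const kept: string[] = [];
  let used = 0;
  // Walk from newest to oldest so the most recent context survives.
  for (let i = messages.length - 1; i >= 0; i--) {
    const cost = await countTokens(messages[i]);
    if (used + cost > maxTokens) break;
    used += cost;
    kept.unshift(messages[i]);
  }
  return kept;
}

// Demo only: a crude "about 4 characters per token" estimate.
const approxCount: CountTokens = async (text) => Math.ceil(text.length / 4);

fitToBudget(["12345678", "1234", "12345678901234567890"], 6, approxCount).then((kept) => {
  console.log(kept); // the two newest messages; the oldest would exceed the budget
});
```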

Note: inline chat is now powered by the upcoming chat participants API. This also means `registerInteractiveEditorSessionProvider` is deprecated and will be removed very soon.

Updated document paste proposal

We've continued iterating on the document paste proposed API. This API enables extensions to hook into copy/paste operations in text documents.

Notable changes to the API include:

- A new `resolveDocumentPasteEdit` method, which fills in the edit on a paste operation. This should be used if computing the edit takes a long time, as it is only called when the paste edit actually needs to be applied.
- All paste operations are now identified by a `DocumentDropOrPasteEditKind`. This works much like the existing `CodeActionKind` and is used in keybindings and settings for paste operations.

The document paste extension sample includes all the latest API changes, so you can test out the API. Be sure to share feedback on the changes and overall API design.

Hover Verbosity Level

This iteration, we have added a new proposed API to expand and contract hovers, called `editorHoverVerbosityLevel`. It introduces a new type called `VerboseHover`, which has two boolean fields, `canIncreaseHoverVerbosity` and `canDecreaseHoverVerbosity`, that signal whether the hover's verbosity can be increased or decreased. If either is set to true, the hover is displayed with `+` and `-` icons, which can be used to increase or decrease the hover verbosity.

The proposed API also introduces a new signature for the `provideHover` method, which takes an additional parameter of type `HoverContext`. When the user sends a hover verbosity request, the hover context is populated with the previous hover, as well as a `HoverVerbosityAction`, which indicates whether the user would like to increase or decrease the verbosity.

`preserveFocus` on Extension-triggered TestRuns

There is a proposal for a `preserveFocus` boolean on test run requests triggered by extensions. Previously, test runs triggered from extension APIs never caused the focus to move into the Test Results view, requiring some extensions to reinvent the wheel to maintain user experience compatibility. This new option can be set on `TestRunRequest`s to ask the editor to move focus as if the run was triggered from within the editor.

Notable fixes

- 209917 Aux window: restore maximised state (Linux, Windows)

Thank you

Last but certainly not least, a big Thank You to the contributors of VS Code.

Issue tracking

Contributions to our issue tracking:

Pull requests

Contributions to vscode:

Contributions to vscode-css-languageservice:

Contributions to vscode-emmet-helper:

Contributions to vscode-eslint:

Contributions to vscode-hexeditor:

Contributions to vscode-json-languageservice:

Contributions to vscode-languageserver-node:

Contributions to vscode-python-debugger:

Contributions to vscode-vsce:

Contributions to language-server-protocol:

Contributions to monaco-editor: